Introducing Sim-1

From Intent to Impact

Traditional verification is a bottleneck. It is too slow for modern development cycles, and excludes the experts who best understand what a system should do.

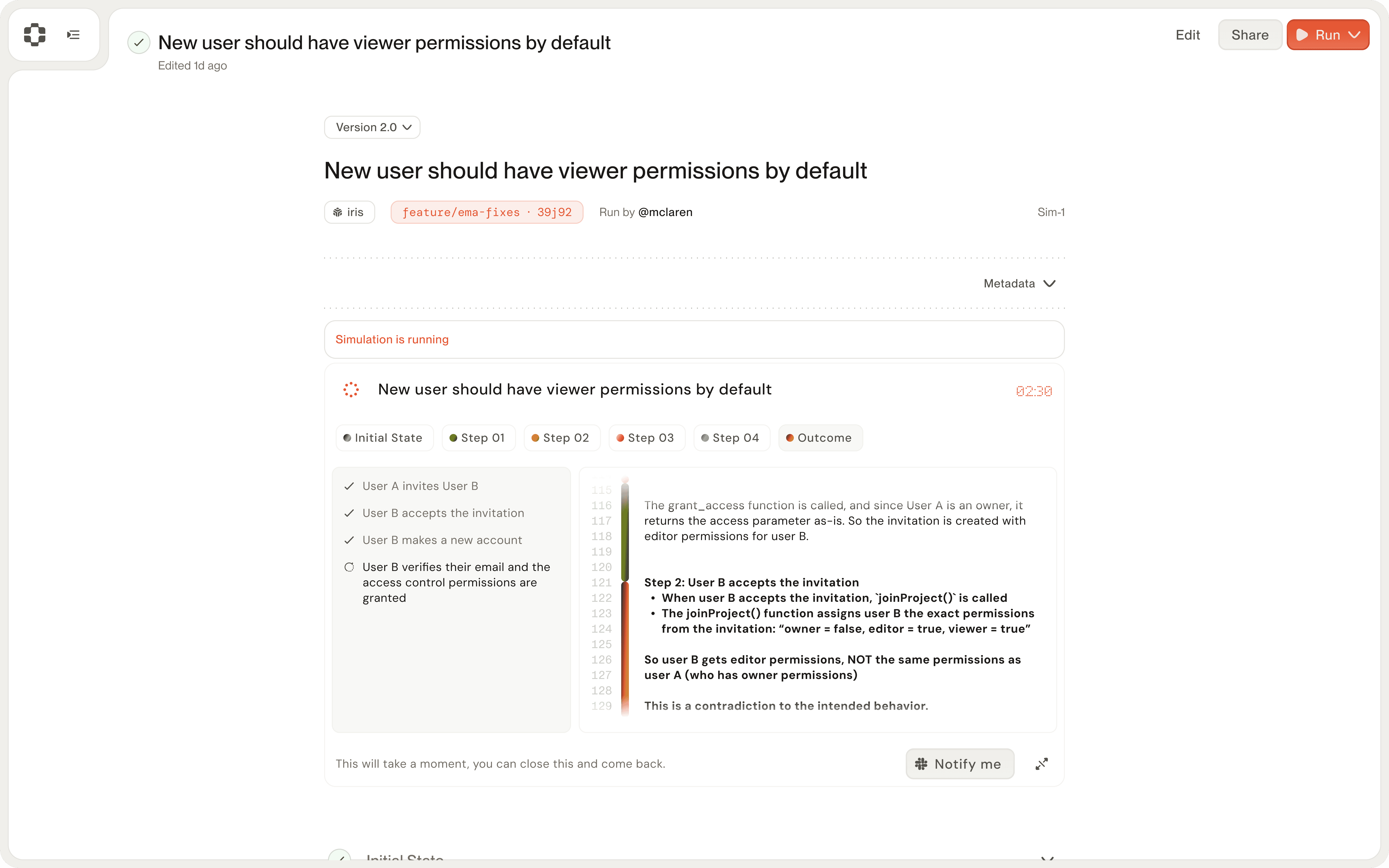

Sim-1 is different. It is powered by two concepts that redefine how we develop and verify code: Scenarios and Simulations.

Scenarios: Natural Language Meets Code Specification

Scenarios are system specifications written in plain English. They form a bridge between human intent and machine execution, allowing anyone to define and validate system behavior without writing a single line of code. In addition, Sim-1 can infer and automatically construct functional scenarios from codebases, tickets, and issues.

Because scenarios are written in natural language, they are resilient to code refactoring, serve as self-updating documentation, and can be reviewed by all product stakeholders, not just developers.

Simulations: Deep Behavioral Understanding

Simulations are long-running analyses that trace a scenario's execution path across the entire system. They translate the high-level intent of a scenario into detailed execution traces, and verify whether the scenario's behavior would hold against a given codebase.

Sim-1’s simulations maintain coherence for more than 30 minutes, tracking state changes across dozens of service boundaries and hundreds of state transitions. Sim-1 explores multiple execution paths, calculates confidence scores, and provides interpretable traces showing precisely how the system’s state evolves at every step.

Democratizing Software Verification

The combination of scenarios and simulations enables Sim-1 to democratize the verification process. Product managers, analysts, and other domain experts can validate system behavior alongside engineers by writing and understanding high-level scenarios that encode the team’s tribal knowledge.

Redefining the Standards in Code Simulation

Sim-1 is an ensemble of models tuned to reason through execution traces in code — not a single, monolithic model. It is a system of specialized models and agents that work in concert to understand code, predict state changes, and maintain coherence over long traces.

We evaluated these Sim-1 components on real-world scenarios from production codebases. (Note: PlayerZero never trains its models on customer data.) Our evaluation framework measures Sim-1 across four critical dimensions: accuracy, scaling, code retrieval/understanding, and holistic system-level reasoning.

Simulation Quality & Accuracy

We evaluated Sim-1 on simulations run over 2,770 scenarios derived from live, production codebases. We measured Sim-1’s ability to correctly predict whether a scenario would pass or fail against the current state of the codebase.

To establish a realistic benchmark, we sourced scenarios from real-world development: failing scenarios were based on active bug reports, tickets, and open pull requests, while passing scenarios were constructed from closed tickets and successfully merged pull requests. To quantify our ability to correctly identify scenarios that should fail, we evaluate accuracy, precision, and recall, where the target class is the correct identification of a failing scenario.
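As a minimal sketch of how these three metrics relate when "scenario fails" is the target class, the following computes them from a list of expected and predicted verdicts. The `Outcome` type and `failure_metrics` function are hypothetical names used for illustration only; they are not part of Sim-1.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    expected_fail: bool   # ground truth: the scenario should fail
    predicted_fail: bool  # verdict produced by the simulation


def failure_metrics(outcomes):
    """Accuracy, precision, and recall with 'fail' as the positive class."""
    tp = sum(o.expected_fail and o.predicted_fail for o in outcomes)
    fp = sum((not o.expected_fail) and o.predicted_fail for o in outcomes)
    fn = sum(o.expected_fail and (not o.predicted_fail) for o in outcomes)
    tn = sum((not o.expected_fail) and (not o.predicted_fail) for o in outcomes)
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        # Of the scenarios flagged as failing, how many really fail?
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        # Of the scenarios that really fail, how many were flagged?
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }
```

Under this framing, a failure flagged on a scenario expected to pass counts as a false positive until it is verified as a real bug, at which point the ground-truth label would need to be corrected.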

This process itself showcased the power of Sim-1. It not only performed with high accuracy, but also uncovered new bugs in code presumed to be stable: Sim-1 flagged verified failures on scenarios that were expected to pass. It did not just meet the benchmark; it exposed the blind spots inherent in traditional, manual validation.

Scaling

We benchmarked Sim-1 against codebases spanning a few thousand to over a billion lines of code.

Our research confirmed that code simulation follows predictable scaling laws. We found that doubling inference-time compute reduces error rates by approximately 40%. More "thought" leads to better reasoning. However, returns diminish after about 30 minutes of simulation time. We found that 5-10 minute simulations provide 90% of the value at 20% of the cost, and set reasonable defaults accordingly. By default, Sim-1 is 10-100x faster than manual code review, and explores 50x more execution paths than typical test suites.
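To make the arithmetic concrete: if each doubling of compute removes about 40% of the remaining error, then error shrinks by a factor of 0.6 per doubling, which is a power law with exponent log2(0.6) ≈ -0.74. The sketch below assumes that idealized relationship holds exactly and ignores the plateau after roughly 30 minutes; `projected_error` is a hypothetical helper, not a Sim-1 API.

```python
import math

REDUCTION_PER_DOUBLING = 0.40  # approximate figure quoted in the text


def projected_error(base_error, compute_ratio, reduction=REDUCTION_PER_DOUBLING):
    """Project the error rate after scaling compute by `compute_ratio`.

    Assumes a pure power law: each doubling multiplies error by (1 - reduction).
    """
    if compute_ratio <= 0:
        raise ValueError("compute_ratio must be positive")
    doublings = math.log2(compute_ratio)
    return base_error * (1 - reduction) ** doublings
```

For example, under these assumptions a 10% error rate at baseline compute would fall to about 3.6% at four times the compute (two doublings: 0.10 × 0.6 × 0.6).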

Code Understanding

The foundation of Sim-1’s reasoning is its deep, structural understanding of code. At its core, Sim-1 constructs a semantic dependency graph that maps both explicit and implicit relationships across the entire codebase — even when they span separate services or repositories.

This graph enables Sim-1 to trace the downstream impact of code changes by analyzing shared data structures, behavioral patterns, naming conventions, and communication protocols. As a result, Sim-1 is able to identify subtle connections that other tools miss.
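The general idea of tracing downstream impact over a dependency graph can be sketched as a breadth-first walk over reversed edges. This is an illustration of the concept only, under the assumption that dependencies are already known; it says nothing about how Sim-1 builds its graph or infers implicit relationships, and the service names in the usage note are hypothetical.

```python
from collections import deque


def downstream_impact(depends_on, changed):
    """Return every node that directly or transitively depends on `changed`.

    `depends_on` maps each node to the nodes it depends on.
    """
    # Invert the edges: for each dependency, record who depends on it.
    dependents = {}
    for node, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(node)

    impacted = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, ()):
            if dependent != changed and dependent not in impacted:
                impacted.add(dependent)
                queue.append(dependent)
    return impacted
```

With `{"checkout": ["payments", "inventory"], "payments": ["ledger"], "reports": ["ledger"]}`, a change to `ledger` would be reported as affecting `payments`, `reports`, and `checkout`.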

When evaluated in isolation, the retrieval module built on this graph sets new state-of-the-art results on standard code retrieval benchmarks. On CodeSearchNet, for example, it reaches a mean reciprocal rank (MRR) of 0.873 (previous SOTA: 0.792).
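For readers unfamiliar with the metric, MRR (mean reciprocal rank) averages 1/rank of the first correct result over all queries, so a score near 1.0 means the right snippet is usually ranked first. A minimal sketch, assuming one relevant item per query; the function and argument names are illustrative:

```python
def mean_reciprocal_rank(ranked_results, relevant):
    """Compute MRR.

    `ranked_results` maps each query to its ranked list of candidates;
    `relevant` maps each query to its single correct candidate.
    Queries whose correct candidate is missing contribute 0.
    """
    if not ranked_results:
        return 0.0
    total = 0.0
    for query, results in ranked_results.items():
        target = relevant[query]
        for rank, candidate in enumerate(results, start=1):
            if candidate == target:
                total += 1.0 / rank
                break
    return total / len(ranked_results)
```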

Holistic, System-Level Reasoning

While traditional models lose context over long sequences, Sim-1 maintains consistent state prediction across complex, distributed scenarios. In production deployments, we’ve observed unparalleled levels of:

Sustained Coherence: Sim-1 remains coherent in simulations lasting over 30 minutes.

State Consistency: Sim-1 achieves a 99.2% consistency rate across long execution traces.

Complexity Management: Sim-1 handles over 100 state transitions within a single simulation.

Boundary Crossing: Sim-1 seamlessly traces logic across more than 50 service boundaries without degradation.

Emergent Capabilities

While developing Sim-1, we observed powerful capabilities emerge that we did not explicitly design for. These behaviors point toward a surprisingly deep, generalized understanding of software principles.

Cross-Language Transfer

Sim-1 understands that behavior is independent of syntax. When presented with functionally equivalent code in different languages, Sim-1 identifies the behavioral similarity with 96% accuracy. This emerged without paired training data.

This ability is critical for code modernization projects. In one instance, Sim-1 correctly identified that a callback-based Node.js implementation and a modern Promise-based TypeScript version had identical state effects, despite their different programming paradigms. In another case, Sim-1 recognized that a sorting algorithm in Python had the same functional behavior as one written in Rust.

Temporal Reasoning

Sim-1 shows a surprising capacity for temporal reasoning in asynchronous systems. It is able to track causality through complex system interactions by reasoning about:

Message queue delays and reordering

Database transaction isolation levels

Distributed cache invalidation patterns

Event-driven architectural flows

This emergent understanding leads to profound insights. In a striking example, Sim-1 correctly predicted a race condition that only manifests under a specific latency pattern across three microservices—an issue that would traditionally require hundreds of integration tests to discover.
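A much simpler version of a latency-dependent race can be sketched deterministically. The toy model below assumes a consumer that applies last-write-wins updates in arrival order; the message values and timings are hypothetical and are not taken from the three-microservice case described above.

```python
def final_value(messages):
    """Return the state after applying messages in arrival order.

    Each message is a (sent_at, latency, value) tuple. The consumer uses
    last-write-wins, so whichever message arrives last determines the state.
    """
    arrivals = sorted(messages, key=lambda m: m[0] + m[1])
    state = None
    for _sent_at, _latency, value in arrivals:
        state = value
    return state


# Same two messages, sent in the same order, under two latency patterns.
low_latency = [(0, 1, "reserved"), (1, 1, "released")]
slow_first = [(0, 5, "reserved"), (1, 1, "released")]
```

In this model `final_value(low_latency)` is `"released"`, while `final_value(slow_first)` is `"reserved"`: the older message overtakes the newer one only when the first hop is slow, which is why a test suite that never reproduces that latency pattern would not see the bug.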

Implicit Invariant Discovery

Sim-1 uncovers the unwritten rules governing system behavior. By analyzing code patterns, Sim-1 infers foundational truths about the system, such as:

Resource Safety: e.g., File handles are always closed by the function that opens them

Data Integrity: e.g., A user's balance must never go negative across any transaction type

Security Posture: e.g., All administrative actions require two-factor authentication

These discoveries make unwritten assumptions explicit, often surprising the teams that built the software. When presented with these invariants, development teams confirmed that in 73% of cases, Sim-1 was identifying a business rule or condition that was otherwise undocumented.
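Once an invariant such as "a balance must never go negative" has been made explicit, it can be checked mechanically. The sketch below assumes a simple ledger of signed integer amounts; `first_violation` and the transaction kinds are hypothetical and only illustrate what checking a discovered invariant might look like.

```python
def first_violation(opening_balance, transactions):
    """Find the first transaction that drives the balance below zero.

    `transactions` is a list of (kind, amount) pairs with signed amounts.
    Returns (index, kind, resulting_balance), or None if the invariant holds.
    """
    balance = opening_balance
    for index, (kind, amount) in enumerate(transactions):
        balance += amount
        if balance < 0:
            return index, kind, balance
    return None
```

Because the check does not branch on `kind`, it applies the rule uniformly across every transaction type, which is the property the invariant describes.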

Error Propagation and Degradation Prediction

Sim-1 understands system resilience. It reasons about the cascading downstream impact of isolated events such as:

Exceptions crossing service boundaries

Service timeouts

Partial failures in distributed transactions

Transient system failures

This allows Sim-1 to identify how errors propagate in scenarios like an exhausted database connection pool—pinpointing which APIs fail first, how retry logic creates backpressure, and which user-facing features degrade.

This capability was validated in practice when Sim-1 correctly predicted that a single timeout in a payment service would create a specific inventory inconsistency three services away—a dependency not obvious to a developer focused on a single service.
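One well-known way retry logic creates backpressure is multiplicative amplification: if every layer in a call chain retries independently, the worst-case number of calls reaching the deepest service is the product of the attempts at each layer. A small sketch of that arithmetic, assuming every attempt at every layer fails; the function name is hypothetical:

```python
import math


def worst_case_attempts(retries_per_layer):
    """Upper bound on calls reaching the deepest service for one user request.

    `retries_per_layer` lists the retry count configured at each layer,
    from the edge inward. Each layer makes 1 initial attempt plus its retries.
    """
    return math.prod(1 + retries for retries in retries_per_layer)
```

Three layers with two retries each can turn a single user request into as many as 27 calls against an already struggling dependency.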

Architecture Pattern Recognition

Sim-1 uncovers a system’s underlying architecture, even when it deviates from the intended design. It automatically surfaces anti-patterns such as:

Hidden coupling between supposedly independent services

Unintended "singleton" behaviors in a distributed environment

Emergent bottlenecks from microservice communication patterns

Implicit dependencies created through shared resources

In one instance, Sim-1 identified two services, believed to be isolated, that were sharing state through a common Redis cache. This discovery exposed a hidden failure mode that had been dormant—and undetected—in production for years.
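The simplest form of this kind of coupling check can be sketched as grouping services by the resources they touch and reporting any resource with more than one user. This assumes the access pairs have already been extracted from code or traces; the service and key names in the usage note are hypothetical, and the sketch does not describe how Sim-1 performs the analysis.

```python
from collections import defaultdict


def shared_resources(accesses):
    """Return resources accessed by more than one service.

    `accesses` is an iterable of (service, resource) pairs.
    The result maps each shared resource to the set of services using it.
    """
    by_resource = defaultdict(set)
    for service, resource in accesses:
        by_resource[resource].add(service)
    return {
        resource: services
        for resource, services in by_resource.items()
        if len(services) > 1
    }
```

Given accesses such as `("orders", "redis:session:*")` and `("billing", "redis:session:*")`, the two services would be reported as coupled through the shared cache key pattern.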

Safety and Trust

Building trust in AI-powered verification is our highest priority. To this end, we’ve subjected Sim-1 to rigorous testing: red team exercises, in which experts attempt to make Sim-1 miss vulnerabilities; fault injection, in which we test with deliberately buggy code; and extensive comparison of predictions against actual execution.

Every Sim-1 prediction is accompanied by a detailed, interpretable trace that links simulated states back to specific lines of code, assumptions, and alternative possible execution flows. The system maintains and propagates confidence scores for every prediction, and automatically escalates low-confidence scenarios for human review.
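As a rough illustration of confidence propagation and escalation, the sketch below multiplies per-step confidences along a trace and flags the result for human review when it drops below a threshold. Both the multiplication rule (which treats steps as independent) and the 0.8 threshold are assumptions made for this example; the actual scoring method is not described here.

```python
import math


def trace_confidence(step_confidences):
    """Combine per-step confidences by multiplication (independence assumed)."""
    return math.prod(step_confidences)


def needs_review(step_confidences, threshold=0.8):
    """Escalate when the combined confidence falls below the threshold."""
    return trace_confidence(step_confidences) < threshold
```

Under these assumptions, two steps at 0.9 each stay above the threshold, while a third 0.9 step pulls the trace below it. This shows why longer traces are harder to keep above any fixed confidence bar.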

We are transparent about Sim-1's current limitations: it is challenged by highly non-deterministic or hardware-specific behavior, and can only reason about external systems based on observed patterns.

Available Today

We are beginning a phased rollout of Sim-1 today for all of our customers. Our developer preview is available with full support for all programming languages and integrations with GitHub, GitLab, Bitbucket, and Azure — along with ticketing integrations across 50+ systems like Jira, Zendesk, Salesforce, ServiceNow, and Azure DevOps for automatic scenario generation.

Request access at playerzero.ai/get-started to start running your first simulation.

For additional technical details, research papers, and API documentation, visit playerzero.ai/docs.

As we rapidly scale our inference capabilities, we are on track to reach full production capacity in the coming weeks, followed by IDE integrations and enterprise deployment options by the end of the year.

What’s Next

Sim-1 represents a fundamental shift in our relationship with code. When we can reliably simulate behavior, verification is no longer a chore but a creative tool. We can explore ideas, secure legacy systems, and build with a confidence that was previously unattainable. The emergence of unforeseen reasoning in Sim-1 suggests we are just beginning to see the potential of AI that truly understands software.

This is a major step toward a future where the gap between business intent and technical implementation disappears, and software reliability reaches new heights through AI-powered simulation.

This is just the beginning. Join us in shaping the future of software development.