The Real Lesson from OpenAI’s Top Customers: Tokens Aren’t Spend. They’re Leverage.

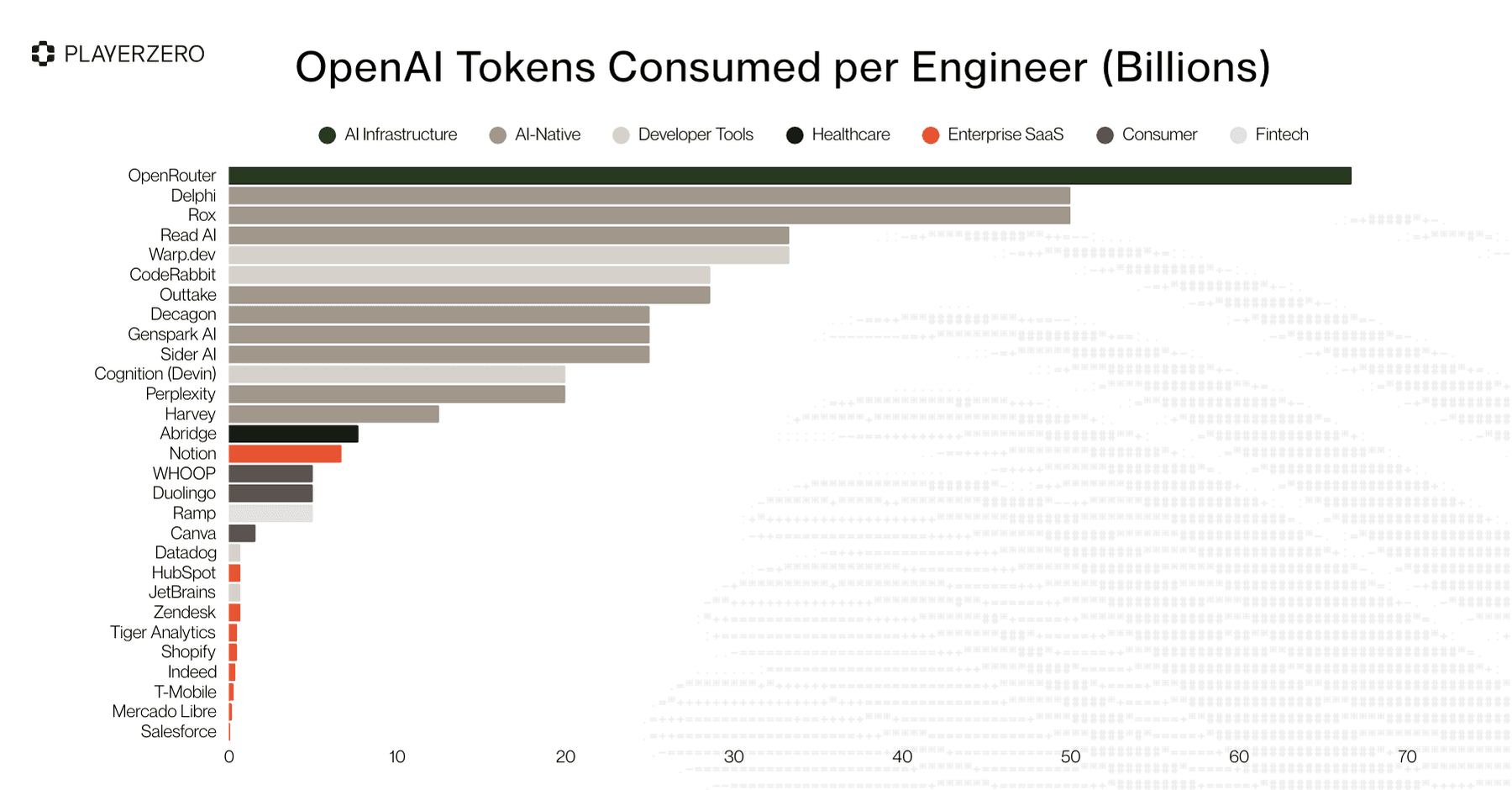

When OpenAI’s list of its top 30 customers by token consumption surfaced across social channels, the immediate reaction focused on who appeared on the list. But the more important insight came from the pattern: a mix of large, mature enterprises and fast-moving AI-native startups all consuming tokens at similar scales.

This wasn’t a leaderboard of experimentation. It was a snapshot of where cognition-heavy work is already being automated—and where AI has quietly become embedded infrastructure.

It’s also important to understand what this list does not capture. OpenAI token consumption represents only one slice of how leading organizations actually run AI in production. Many of the most sophisticated teams don’t concentrate usage in a single model or provider—they distribute workloads across multiple models and vendors based on cost, latency, context length, and task complexity. In that sense, this moment mirrors the early days of cloud adoption: the companies that extracted the most leverage weren’t the ones that picked a single hyperscaler, but the ones that designed systems flexible enough to evolve as infrastructure choices changed.
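Here is a minimal sketch of that multi-vendor pattern. The router picks the cheapest model that satisfies a task's hard constraints. It assumes each task can be summarized by its context size, a latency budget, and a minimum capability tier. The model names, prices, latencies, and tiers are hypothetical placeholders, not real vendor figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_1k_tokens: float  # blended USD price (hypothetical)
    p95_latency_ms: int        # observed p95 latency (hypothetical)
    max_context_tokens: int
    tier: int                  # rough capability tier: 1 = light, 3 = frontier


@dataclass(frozen=True)
class Task:
    context_tokens: int
    latency_budget_ms: int
    min_tier: int              # minimum capability the task needs


def route(task: Task, catalog: list[ModelOption]) -> ModelOption:
    """Return the cheapest model that satisfies the task's hard constraints."""
    eligible = [
        m for m in catalog
        if m.max_context_tokens >= task.context_tokens
        and m.p95_latency_ms <= task.latency_budget_ms
        and m.tier >= task.min_tier
    ]
    if not eligible:
        raise ValueError("no model satisfies the task constraints")
    # Cost decides; latency breaks ties between equally priced models.
    return min(eligible, key=lambda m: (m.cost_per_1k_tokens, m.p95_latency_ms))


# Hypothetical catalog spanning two vendors.
CATALOG = [
    ModelOption("vendor-a-small", 0.2, 300, 16_000, 1),
    ModelOption("vendor-b-mid", 1.0, 800, 128_000, 2),
    ModelOption("vendor-a-large", 5.0, 2_000, 200_000, 3),
]

print(route(Task(4_000, 500, 1), CATALOG).name)      # vendor-a-small
print(route(Task(100_000, 1_500, 2), CATALOG).name)  # vendor-b-mid
print(route(Task(150_000, 5_000, 2), CATALOG).name)  # vendor-a-large
```

The key design choice is keeping the catalog as data rather than code. Swapping a vendor or adding a model then changes one list entry, not every call site.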

Even with that limitation, the list still reveals something essential. Across this group, token consumption correlates less with company size and more with how deeply AI is woven into real workflows. The organizations driving higher consumption are using AI to replace manual reasoning, not just accelerate isolated tasks.

That signals a deeper shift. Teams are beginning to measure leverage not by headcount, but by how much cognitive work can be offloaded to AI systems. The real competitive advantage comes from designing work so AI agents can operate as specialists—not simply as assistants.

The industry failed to anticipate the rate of AI adoption across sectors

Each of the top token consumers has different motivations and use cases. AI has become embedded inside real workflows that move organizations forward: the workflows that require reasoning, decisions, and customer-facing impact. That’s why it helps to break the pattern down by sector and by role to see where this shift is actually happening.

Sector-level patterns

The list revealed how broadly AI has already moved into production. Each sector uses large volumes of tokens for a different category of cognitive work:

Telecom uses AI inside real-time decisioning systems—intent routing, anomaly detection, agent assist—where latency and accuracy directly affect call outcomes.

E-commerce and fintech rely on reasoning-heavy pipelines: fraud scoring, policy interpretation, dispute mediation, document understanding (like KYC and invoices), and multi-step risk decisions.

Healthcare and education depend on long-context reasoning for summarization, tutoring, clinical documentation, and adaptive learning.

Developer tooling uses AI for code understanding, diff analysis, test generation, planning, and debugging—tasks with long dependency chains and complex reasoning paths.

CRM and enterprise SaaS integrate AI into search, ticket intelligence, customer insights, and internal knowledge flows that run continuously.

These workflows don’t look the same, but they share something important: they represent high-cost cognitive tasks that used to be bottlenecked by human attention, not compute.

Token-heavy workloads map directly to these categories—retrieval, reasoning, summarization, mediation, debugging—because each requires deep contextual understanding at scale.

Role-level adoption patterns

The cross-industry spread is only half the story. Inside companies, the roles that consume tokens are just as revealing:

Engineering leadership drives structural adoption by embedding AI into triage, code intelligence, risk detection, and other core workflows that touch the codebase.

Product and operations teams use AI within customer-facing experiences, creating always-on token usage as workflows run in production.

Founders and early engineers at AI-native startups architect their systems so agents own end-to-end workflows, not just isolated prompts.

Support leaders are increasingly using AI for ticket classification, triage, root-cause mapping, and response generation, significantly shortening resolution times.

Across these roles, the same shift is visible: AI is no longer a layer on top of work; it is an operational backbone inside the work.

This diversity isn’t noise—it’s a clear signal. The organizations consuming the most tokens are delegating meaningful cognitive work to AI systems thousands of times per day, across functions and across the stack.

How token consumption reveals a newly leveled playing field

A closer look at the top token consumers reveals something more interesting than a startup-versus-enterprise divide.

What’s actually happening is a structural leveling of the playing field.

Generative AI is doing for software what cloud infrastructure did a decade ago: removing a category of constraint that once favored incumbents. Just as startups no longer needed to build their own data centers to compete, they no longer need massive teams of specialists to reason across complex systems, analyze failures, or iterate quickly on customer feedback.

The result is not simply faster execution—it’s a shift in who gets to compete.

New entrants can now operate with the same cognitive surface area as much larger organizations, because AI absorbs the work that used to require scale: context gathering, cross-system reasoning, analysis, and synthesis. Tokens, in this sense, are not about “who runs more AI,” but about how much cognitive terrain a team can cover.

AI-native startups aren’t automating workflows—they’re reinventing them

AI-native startups aren’t just doing existing work faster. They’re questioning whether the work needs to look the same at all.

Because AI sits at the center of their architecture from day one, these teams aren’t constrained by legacy assumptions about how problems are solved. They’re free to reimagine entire workflows—not by building a better version of the same process, but by designing fundamentally different ones.

In practice, this means:

Products that assume continuous reasoning, not discrete handoffs

Systems that learn as they operate, rather than relying on static rules

Workflows designed around exploration and iteration, not rigid pipelines

This is why small teams can now rival the output and impact of much larger organizations. It’s not that AI has “replaced” humans—it’s that AI has removed the historical penalties of being small.

High token consumption in these teams is a byproduct of this shift. It reflects constant exploration, reasoning, and iteration embedded directly into product and engineering processes.

Key takeaway: AI-native startups gain advantage not by automating humans out of the loop, but by escaping the constraints of how work used to be done.

Enterprises face a different—but equally important—opportunity

Enterprises approach AI from a different starting point. They carry existing systems, processes, and organizational structures that can’t be rewritten overnight.

As a result, most enterprise AI adoption today focuses on augmentation:

Faster investigation and triage

Better visibility across complex systems

Reduced manual effort in analysis and coordination

This isn’t a limitation—it’s a strategic reality.

Augmentation allows enterprises to unlock meaningful gains without destabilizing core systems. And when done well, it enables teams to operate at a scale and level of complexity that would otherwise be unmanageable.

Where enterprises risk falling behind is not in how much AI they use, but in whether they treat AI as a surface-level efficiency tool or as a way to fundamentally expand what their teams can reason about and act on.

Key takeaway: The competitive gap isn’t between startups and enterprises—it’s between teams that use AI to rethink how problems are solved and those that use it only to optimize existing workflows.

What tokens actually signal

Seen through this lens, token consumption is not a proxy for “AI taking over work.”

It’s a signal of how much cognitive work an organization is able to engage with—how many scenarios it can explore, how much context it can reason over, and how quickly it can adapt.

That’s why tokens per employee is a more telling metric than raw volume: it reflects how much leverage each person has, not how automated the organization is.

The real transformation isn’t AI execution versus human execution. It’s constraint removal versus constraint preservation—and that’s the shift reshaping competition across software today.

Why token consumption matters—and why tokens per employee is the real metric of leverage

The leaderboard serves a useful purpose: it shows which companies are running the most AI workloads. But total token consumption alone doesn’t tell you whether that usage is valuable, efficient, or strategically sound. A company can burn millions of tokens without changing how it operates—or deploy billions in a way that fundamentally reshapes how work gets done.

For engineering leaders, the more revealing question isn’t how many tokens the organization consumes. It’s how effectively tokens amplify human judgment and execution. The goal isn’t to replace people with AI, but to increase how much meaningful work each person can responsibly orchestrate through AI systems. That’s where durable outcomes show up: lower MTTR, more stable releases, faster iteration, and better customer experiences without linear headcount growth.

Tokens as cognitive work

Tokens aren’t abstract units. Technically, they are the fragments of text a model reads and writes, which makes them a practical, atomic measure of machine-executed cognition: the reasoning, retrieval, synthesis, comparison, and decision-making that AI performs on behalf of humans.

In practice, token-heavy workflows map to work that was historically expensive and slow:

Multi-step reasoning across systems

Context gathering and grounding

Code analysis and debugging

Synthesis of fragmented signals into a decision

When these workflows are well-architected, token consumption correlates more closely with delivered value than with raw activity. The system isn’t “thinking more” for its own sake—it’s removing cognitive bottlenecks that previously constrained teams. This shows up as shorter delivery cycles, smoother handoffs, and fewer delays caused by manual context gathering or analysis.
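Here is a rough sketch of why multi-step reasoning and context gathering are token-heavy. With a stateless chat-style API, each call in an agent loop typically re-sends the accumulated history, so input tokens grow roughly quadratically with the number of steps. The function assumes a fixed starting context and a fixed number of tokens appended per step (model output plus tool results). Both figures are illustrative.

```python
def loop_input_tokens(initial_context: int, added_per_step: int, steps: int) -> int:
    """Total input tokens for an agent loop that re-sends its full history each call.

    Assumes a stateless API: call k re-reads the initial context plus everything
    appended by earlier steps (model output and tool results).
    """
    total = 0
    history = initial_context
    for _ in range(steps):
        total += history           # the entire history is read again on this call
        history += added_per_step  # this step's output and tool results are appended
    return total


# Closed form: steps * initial + added * steps * (steps - 1) / 2
print(loop_input_tokens(8_000, 1_000, 10))  # 125000
print(loop_input_tokens(8_000, 1_000, 20))  # 350000
```

In this model, doubling the loop from 10 to 20 steps nearly triples input tokens. That is why summarizing or trimming history matters as much as choosing the model.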

Tokens per employee as a measure of leverage—not maximization

Total token consumption tells you how much work the system is doing. Tokens per employee reveals how work is distributed between humans and AI, and whether that balance is healthy.

More tokens per employee aren’t always better. Too few, and teams remain constrained by human bandwidth: decisions pile up, context is fragmented, and progress slows. Too many, and organizations risk letting AI make decisions without sufficient human oversight, increasing the chance of subtle errors, misalignment, or downstream risk.
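The ratio itself is simple arithmetic; the judgment lies in how it is read. This is a minimal sketch under two assumptions. First, per-team token totals are available from usage exports. Second, each organization defines its own healthy band. The bounds and team figures below are hypothetical, not published benchmarks.

```python
from dataclasses import dataclass


@dataclass
class TeamUsage:
    team: str
    monthly_tokens: int  # e.g. summed from a provider usage export (assumed)
    headcount: int


def tokens_per_employee(usage: TeamUsage) -> float:
    """Tokens consumed in the period divided by the people accountable for that work."""
    if usage.headcount <= 0:
        raise ValueError("headcount must be positive")
    return usage.monthly_tokens / usage.headcount


def classify(usage: TeamUsage, low: float, high: float) -> str:
    """Place a team relative to a band the organization sets for itself.

    "below band" suggests human bandwidth is the constraint; "above band" is a
    prompt to check that oversight has kept pace, not proof of a problem.
    """
    ratio = tokens_per_employee(usage)
    if ratio < low:
        return "below band"
    if ratio > high:
        return "above band"
    return "within band"


teams = [
    TeamUsage("payments", 40_000_000, 8),
    TeamUsage("support", 2_000_000, 10),
    TeamUsage("platform", 300_000_000, 5),
]
for t in teams:
    print(t.team, tokens_per_employee(t), classify(t, low=1_000_000, high=20_000_000))
```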

The most effective teams operate in a sweet spot of AI–human leverage:

Humans set intent, constraints, and accountability

AI handles the heavy cognitive lifting at scale

Decisions remain explainable, reviewable, and grounded

This is why tokens per employee is a better diagnostic metric than raw token volume. It reflects whether AI is being used to responsibly amplify human capability rather than to automate for automation’s sake.

At that balance point, teams consistently see:

Higher throughput per engineer

Faster issue resolution without sacrificing quality

Systems that scale without proportional increases in cost or risk

This dynamic drives what we call the great flattening: smaller teams achieving impact that previously required far larger organizations, not because AI replaced people, but because it absorbed the most cognitively expensive parts of the workflow.

Why this reframe matters for engineering leaders

Viewing tokens through the lens of leverage rather than cost gives leaders a more straightforward way to assess AI maturity. The organizations seeing the strongest returns aren’t optimizing for token minimization or maximization—they’re optimizing for effective AI–human collaboration.

When that balance is right, improvements compound: customer-facing issues are resolved faster, releases stabilize, and teams gain confidence to move quickly without increasing operational risk. These outcomes create a direct line between AI adoption and business performance—and give leaders a practical benchmark to evaluate progress over time.

Tokens aren’t the price of experimentation. They’re the operating fuel of a new way of working—one where leverage comes from how intelligently AI and humans share the cognitive load.

The shift toward AI-native workforces is creating new engineering challenges earlier than expected

Once AI stops being an experiment and becomes a core executor of work, the entire engineering system comes under pressure in ways that traditional scaling models never predicted.

AI-generated changes move faster than human review cycles. Agentic workflows introduce new dependencies and edge cases. And the pace of iteration increases not because teams grow, but because each engineer now orchestrates 10–100× more cognitive work through AI.

In other words: the moment AI starts running real production workflows, the old assumptions about pace, QA, and reliability break.

The challenges listed below are what companies at the high end of token consumption are currently facing.

Accelerated iteration pressures

When AI-driven code changes, experiments, and decisions continuously flow into production, familiar problems surface much earlier than they used to. Rapid iteration creates failure modes that previously only appeared at massive scale:

More regressions

Higher defect escape risk

Greater strain on integration points

Increased variance as AI-generated changes introduce novel edge cases

Issues that once required huge user bases or massive traffic now appear even in small teams—because throughput is no longer tied to headcount. AI accelerates delivery beyond what legacy QA, review cycles, and guardrails were designed to handle.
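A toy queue model shows why throughput decoupled from headcount strains review. It assumes a fixed daily arrival rate of AI-generated changes and a fixed human review capacity; both numbers are hypothetical. Under those assumptions, the backlog grows by the difference every day, however small the team is.

```python
def review_backlog(changes_per_day: float, reviews_per_day: float,
                   days: int, start: float = 0.0) -> list[float]:
    """Day-by-day review backlog under a simple deterministic queue model.

    Each day adds the new changes, then reviewers clear up to their daily
    capacity. The backlog never drops below zero.
    """
    backlog = start
    history = []
    for _ in range(days):
        backlog = max(0.0, backlog + changes_per_day - reviews_per_day)
        history.append(backlog)
    return history


print(review_backlog(30, 20, 5))  # [10.0, 20.0, 30.0, 40.0, 50.0]
print(review_backlog(15, 20, 3))  # [0.0, 0.0, 0.0]
```

When arrivals exceed capacity, there is no steady state. The backlog keeps growing until capacity rises or part of the review work is automated.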

Complexity of agent-driven interactions

As soon as agents begin owning end-to-end tasks, they depend on accurate, current system context to reason correctly. When that context is incomplete or static:

Reasoning chains break

Cascading failures compound across services

Debugging becomes far harder because traces span multiple systems and decision layers

Agents behave differently from humans—they don’t “work around” missing context or ambiguity. That means gaps in system understanding surface as reliability issues almost immediately.
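Because agents won't improvise around gaps, one common guard is to check that required context is present and fresh before acting, and to escalate when it is not. This is a minimal sketch. The context names and the one-hour freshness limit are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class ContextItem:
    name: str
    fetched_at: datetime


# Hypothetical context an incident-triage agent needs before acting.
REQUIRED_CONTEXT = {"recent_deploys", "service_dependencies", "error_traces"}


def check_context(items: list[ContextItem], required: set[str],
                  max_age: timedelta, now: datetime) -> list[str]:
    """Return a list of problems; an empty list means the context is usable."""
    by_name = {item.name: item for item in items}
    problems = []
    for name in sorted(required):
        item = by_name.get(name)
        if item is None:
            problems.append(f"missing: {name}")
        elif now - item.fetched_at > max_age:
            problems.append(f"stale: {name}")
    return problems


def next_step(items: list[ContextItem], required: set[str],
              max_age: timedelta, now: datetime) -> str:
    # Rather than guessing around gaps, hand the task to a human with the reasons.
    problems = check_context(items, required, max_age, now)
    return "proceed" if not problems else "escalate: " + ", ".join(problems)


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
items = [
    ContextItem("recent_deploys", now - timedelta(minutes=5)),
    ContextItem("service_dependencies", now - timedelta(hours=3)),
]
print(next_step(items, REQUIRED_CONTEXT, timedelta(hours=1), now))
# escalate: missing: error_traces, stale: service_dependencies
```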

Gaps in traditional QA and triage

Most QA, triage, and debugging workflows were built for human-driven change velocity, not autonomous or semi-autonomous systems. As AI-generated updates increase:

Manual triage becomes a bottleneck

Evidence remains siloed across teams

Support and engineering teams struggle to maintain shared context

Handoffs slow down resolution

These bottlenecks aren’t a sign of poor engineering—they’re a sign that the environment has changed. AI-native velocity exposes weaknesses in traditional toolchains and processes far earlier than expected.

These challenges are not edge cases—they are structural outcomes of AI taking on real cognitive work in production. The companies consuming the most tokens are simply encountering them first—and showing that mature AI adoption demands new infrastructure, practices, and ways of working.

Infrastructure-heavy AI consumers now need reliability at scale

As AI moves into the critical path of core workflows, the companies consuming the most tokens are discovering a painful truth: traditional observability and QA aren’t built for continuous machine reasoning.

Engineering teams must shift from “debugging code occasionally” to engineering reliability for nonstop, autonomous decision-making. The capabilities below are the foundations required to operate AI at scale without sacrificing stability.

Unified system understanding

AI-driven systems need a complete, code-grounded view of how software behaves in production—a single model that connects repos, telemetry, user sessions, tickets, and logs.

When analysis is anchored directly in the codebase, AI can reason accurately about failures, dependencies, and user impact, which sharply reduces hallucinated conclusions and accelerates triage.
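One small piece of such a model is linking a production error, and the ticket that reported it, back to the code change most likely responsible. This is a minimal sketch assuming deploy records carry a service name, commit SHA, and timestamp; all sample values are hypothetical. Time-based correlation narrows the search to a candidate commit, but it does not prove causation, so the analysis still has to reason over the change itself.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class Deploy:
    service: str
    commit_sha: str
    deployed_at: datetime


@dataclass(frozen=True)
class ErrorEvent:
    service: str
    first_seen: datetime
    ticket_id: Optional[str] = None  # support ticket that reported it, if any


def suspect_deploy(error: ErrorEvent, deploys: list[Deploy]) -> Optional[Deploy]:
    """Most recent deploy of the failing service at or before the error first appeared."""
    candidates = [
        d for d in deploys
        if d.service == error.service and d.deployed_at <= error.first_seen
    ]
    return max(candidates, key=lambda d: d.deployed_at, default=None)


deploys = [
    Deploy("billing", "a1b2c3", datetime(2025, 1, 1, 8, 0)),
    Deploy("billing", "d4e5f6", datetime(2025, 1, 1, 9, 30)),
    Deploy("billing", "0718aa", datetime(2025, 1, 1, 11, 0)),
]
error = ErrorEvent("billing", datetime(2025, 1, 1, 10, 0), ticket_id="TICKET-1042")
print(suspect_deploy(error, deploys).commit_sha)  # d4e5f6
```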

Predictive reliability controls

With AI-generated changes flowing constantly, reactive reliability is no longer enough.

This calls for proactive safeguards such as automated regression detection, identification of high-risk changes, and early-impact signals that surface problems before users feel degradation.

This shifts engineering from discovering issues late to preventing them early—critical when iteration speed outpaces human review cycles.
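Automated regression detection can start as simply as comparing error rates before and after a deploy. The sketch below uses a one-sided two-proportion z-test. The threshold of 3.0 is an assumption meant to keep false alarms rare. Production systems would also need to account for shifts in traffic mix and for running many such checks at once.

```python
import math


def error_rate_regressed(base_errors: int, base_requests: int,
                         new_errors: int, new_requests: int,
                         z_threshold: float = 3.0) -> tuple[bool, float]:
    """Flag a deploy whose error rate is significantly higher than the baseline.

    One-sided two-proportion z-test using the pooled error rate.
    """
    p_base = base_errors / base_requests
    p_new = new_errors / new_requests
    pooled = (base_errors + new_errors) / (base_requests + new_requests)
    se = math.sqrt(pooled * (1 - pooled) * (1 / base_requests + 1 / new_requests))
    if se == 0:  # no errors at all (or nothing but errors): nothing to compare
        return False, 0.0
    z = (p_new - p_base) / se
    return z > z_threshold, z


# Hypothetical windows of 100,000 requests before and after a deploy.
regressed, z = error_rate_regressed(50, 100_000, 120, 100_000)
print(regressed, round(z, 2))  # True 5.37
regressed, z = error_rate_regressed(50, 100_000, 55, 100_000)
print(regressed, round(z, 2))  # False 0.49
```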

Knowledge democratization

As workflows become more distributed and agent-driven, knowledge can no longer live only in the heads of senior engineers. Auto-generated architecture maps, cross-service dependency insights, and self-service debugging context reduce reliance on institutional knowledge. They also enable junior engineers to resolve complex issues without constant escalation.
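Cross-service dependency insights can be derived mechanically from distributed traces, where each call from one service to another yields a (caller, callee) pair. The sketch below assumes those pairs have already been extracted; the service names are hypothetical. Reversing the graph answers a common triage question: which upstream services are affected if this one fails?

```python
from collections import defaultdict, deque


def build_dependency_graph(call_edges):
    """Map each service to the services it calls, from (caller, callee) pairs."""
    graph = defaultdict(set)
    for caller, callee in call_edges:
        if caller != callee:  # ignore calls within a single service
            graph[caller].add(callee)
    return graph


def impacted_by(service, graph):
    """Every service that calls `service` directly or transitively (its blast radius)."""
    callers_of = defaultdict(set)
    for caller, callees in graph.items():
        for callee in callees:
            callers_of[callee].add(caller)
    impacted, queue = set(), deque([service])
    while queue:  # breadth-first walk up the call graph
        current = queue.popleft()
        for caller in callers_of[current]:
            if caller not in impacted:
                impacted.add(caller)
                queue.append(caller)
    impacted.discard(service)  # a cycle may lead back to the starting service
    return impacted


edges = [
    ("web", "checkout"), ("checkout", "payments"), ("checkout", "inventory"),
    ("admin", "inventory"), ("payments", "ledger"),
]
graph = build_dependency_graph(edges)
print(sorted(impacted_by("ledger", graph)))  # ['checkout', 'payments', 'web']
```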

Modern quality and debugging infrastructure

Continuous AI-led change introduces failure patterns that old debugging workflows can’t absorb. Modern reliability loops depend on code-anchored evidence, centralized cross-system context, and less tool fragmentation; in return, they deliver faster root-cause analysis and fewer repeat regressions.

Together, these create a feedback system that adapts to the velocity of AI, not the velocity of human-driven development.

What engineering leaders can learn from the companies consuming the most tokens

For leaders, the lesson from the top token consumers isn’t “use more AI.” It’s that leverage comes from how work is structured—and how responsibility is shared between humans and AI over time.

The organizations getting outsized returns don’t flip a switch and hand everything to agents on day one. They start with humans firmly in the loop, use AI to absorb the heaviest cognitive load, and then deliberately reduce human intervention as workflows prove reliable, explainable, and repeatable. Everything else—lower MTTR, faster releases, fewer regressions—flows from that progression.

Across these organizations, a few patterns show up consistently.

Design workflows where responsibility shifts gradually

High-leverage teams don’t treat AI as a sidecar or a magic replacement. They design workflows where:

Humans define intent, constraints, and success criteria

AI executes bounded tasks with clear guardrails

Oversight is explicit at first, then relaxed as confidence grows

Over time, agents move from assisting on isolated steps to owning larger portions of the workflow—but only once outputs are trustworthy and failure modes are well understood. This is how AI becomes an executor safely, not recklessly.
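The "explicit at first, then relaxed" pattern can be encoded as a small policy object. This is a minimal sketch that assumes a human reviews each agent output and marks it accepted or rejected. The level names, window size, and thresholds are hypothetical and would be tuned per workflow. Evidence is cleared on every level change, so the agent has to re-earn trust at the new level.

```python
from collections import deque
from dataclasses import dataclass, field

# Hypothetical oversight levels, from most to least human involvement.
LEVELS = ["human_approves_every_action", "human_spot_checks", "autonomous_with_audit"]


@dataclass
class AutonomyPolicy:
    window: int = 50            # reviewed outcomes per decision (assumed)
    promote_at: float = 0.95    # acceptance rate that relaxes oversight (assumed)
    demote_below: float = 0.85  # acceptance rate that tightens oversight (assumed)
    level: int = 0
    outcomes: deque = field(default_factory=deque)

    def record(self, accepted: bool) -> str:
        """Log one reviewed agent output and return the current oversight level."""
        self.outcomes.append(accepted)
        if len(self.outcomes) > self.window:
            self.outcomes.popleft()  # keep only the most recent `window` outcomes
        if len(self.outcomes) == self.window:
            rate = sum(self.outcomes) / self.window
            if rate >= self.promote_at and self.level < len(LEVELS) - 1:
                self.level += 1
                self.outcomes.clear()  # the next level must be earned with fresh evidence
            elif rate < self.demote_below and self.level > 0:
                self.level -= 1
                self.outcomes.clear()
        return LEVELS[self.level]


policy = AutonomyPolicy(window=10, promote_at=0.9, demote_below=0.7)
for _ in range(10):
    level = policy.record(True)
print(level)  # human_spot_checks
```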

Build leverage—not experimentation

The most effective teams measure progress by outcomes, not novelty. Early on, humans remain deeply involved while teams track whether AI is actually creating leverage:

Are resolution times shrinking?

Are defects escaping less often?

Is each engineer able to oversee more work without losing control?

As AI systems demonstrate consistency, teams intentionally reduce manual touchpoints, freeing humans to focus on higher-order decisions instead of routine analysis. Tokens per employee becomes useful here not as a goal to maximize, but as a signal that AI is absorbing the right kind of work at the right pace.
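The three questions above translate into metrics that most teams can already compute from incident and defect records. This is a minimal sketch with hypothetical record shapes and sample values. What matters is tracking each value per period against a baseline taken before AI took on the work.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    opened: datetime
    resolved: datetime


def mean_time_to_resolution(incidents: list[Incident]) -> timedelta:
    """Are resolution times shrinking? Compare this value period over period."""
    if not incidents:
        raise ValueError("need at least one resolved incident")
    total = sum((i.resolved - i.opened for i in incidents), timedelta())
    return total / len(incidents)


def defect_escape_rate(found_in_production: int, found_before_release: int) -> float:
    """Are defects escaping less often? Share of all defects first found in production."""
    total = found_in_production + found_before_release
    return found_in_production / total if total else 0.0


def work_per_engineer(items_completed: int, engineers: int) -> float:
    """Is each engineer overseeing more work? Completed items per engineer."""
    if engineers <= 0:
        raise ValueError("engineers must be positive")
    return items_completed / engineers


incidents = [
    Incident(datetime(2025, 3, 3, 9), datetime(2025, 3, 3, 13)),
    Incident(datetime(2025, 3, 4, 10), datetime(2025, 3, 4, 12)),
]
print(mean_time_to_resolution(incidents))  # 3:00:00
print(defect_escape_rate(3, 27))           # 0.1
```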

Prepare for reliability challenges before they force your hand

Teams consuming the most tokens learned early that AI adoption isn’t a feature upgrade—it’s a shift in operating model. As AI takes on more responsibility, failure modes surface faster and on a larger scale.

The leaders who navigate this well invest early in:

Systems that predict and prevent failures, not just explain them after the fact

Shared, code-grounded visibility across engineering, support, and operations

Debugging workflows that make AI decisions inspectable and reversible

This ensures that as human intervention scales down, trust scales up—without sacrificing reliability.
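One way to make agent decisions inspectable and reversible is to require that every action be recorded with its rationale and an undo step. This is a minimal sketch, and the class and field names are hypothetical. Real rollbacks, such as schema migrations or calls to external systems, are rarely as clean as a single callback.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AgentAction:
    description: str          # what the agent did, in plain language
    rationale: str            # evidence it relied on, kept for later review
    undo: Callable[[], None]  # how to reverse the action


class ActionLog:
    """Ordered record of agent actions that humans can inspect and roll back."""

    def __init__(self) -> None:
        self._actions: list[AgentAction] = []

    def apply(self, action: AgentAction, do: Callable[[], None]) -> None:
        do()  # perform the change first, then record it
        self._actions.append(action)

    def history(self) -> list[str]:
        return [f"{a.description} (because: {a.rationale})" for a in self._actions]

    def rollback_last(self, n: int = 1) -> None:
        # Undo in reverse order so later actions are reversed before earlier ones.
        for _ in range(min(n, len(self._actions))):
            self._actions.pop().undo()


state = {"new_checkout_enabled": True, "replicas": 2}
log = ActionLog()
log.apply(
    AgentAction("disabled new_checkout flag", "error spike after deploy",
                undo=lambda: state.update(new_checkout_enabled=True)),
    do=lambda: state.update(new_checkout_enabled=False),
)
log.apply(
    AgentAction("scaled checkout to 4 replicas", "p95 latency above target",
                undo=lambda: state.update(replicas=2)),
    do=lambda: state.update(replicas=4),
)
print(log.history())
log.rollback_last(2)
print(state)  # {'new_checkout_enabled': True, 'replicas': 2}
```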

How PlayerZero supports organizations operating at this new scale of AI adoption

PlayerZero is not simply another AI tool provider—it operates using the same patterns as the top AI-consuming companies themselves. Its platform reflects deep AI integration, using meaningful token volume to model, reason about, and execute cognitive workflows that would traditionally require specialized engineers.

At its core, PlayerZero’s agents are designed to mirror how real teams work:

They own outcomes as part of a closed-loop process, not isolated tasks.

Agents handle end-to-end triage, regression detection, and code-level reasoning: the same workflow a senior engineer would perform, just at machine speed. And they document their findings in systems of record, just like a human engineer.

They model real cognitive workflows instead of responding to prompts in isolation.

By grounding analysis directly in repos, changes over time, logs, telemetry, memories, and user sessions, PlayerZero can reason about issues with full system context.

They help teams scale AI adoption safely.

Teams start with human-guided analysis, then gradually move toward more autonomous workflows as AI outputs prove consistent and reliable. The result is more proactive issue detection, shorter learning cycles, and stronger reliability as organizations accelerate development.

For enterprise engineering teams, the impact shows up quickly—faster issue resolution, fewer customer-facing incidents, more stable releases, and higher throughput without adding headcount. AI projects also reach time-to-value faster because the surrounding reliability system can keep pace with AI-driven velocity.

This pattern plays out across customers like Cayuse, a research management platform with more than 20 interconnected applications and a highly fragmented multi-repo architecture. Before PlayerZero, they relied on slow, reactive workflows to resolve customer issues. With PlayerZero, the team identifies and fixes 90% of issues before they reach the customer. Time to resolution dropped by 80%, junior engineers began handling investigations independently, and high-priority ticket volume declined—resulting in a noticeably smoother customer experience.

Cayuse’s transformation reflects a broader pattern: when AI-driven triage and root-cause analysis sit inside core engineering workflows, teams gain real operational leverage—measured in speed, reliability, and customer outcomes—not just in token consumption.

What engineering leaders should take away—and where to go next

The list reveals a shift toward AI-driven work, where agents execute cognitive tasks with human oversight. The real metric to watch is tokens per employee, a proxy for how much work each person can offload and how quickly teams can deliver.

Meaningful AI adoption isn’t about experimentation—it’s about redesigning work so AI becomes a true executor, not just an assistant.

For engineering leaders navigating large-scale AI adoption, the real value shows up in metrics like velocity and operational efficiency. The next step is clear: enable AI-native workflows safely—without trading speed for stability.

Explore how PlayerZero helps teams scale AI adoption while maintaining reliability and customer trust.