7 Strategies for Accelerating Developer Onboarding with AI

Developer onboarding has quietly become one of the biggest productivity drains inside modern engineering teams. Picture this: a new engineer joins a mid-sized team, opens their IDE, and is immediately confronted with dozens of interconnected services, unfamiliar patterns, and documentation that trails months behind the product.

What’s supposed to be an exciting first week becomes a scavenger hunt—clarifying assumptions, guessing at code paths, and trying to reconstruct architectural intent from fragments spread across repos and tribal knowledge.

Meanwhile, senior engineers lose hours each day rerouting questions, revisiting decisions made years ago, cautiously reviewing early pull requests, and helping new hires build mental models from scratch. These interruptions stack up. Velocity drops. Quality slips. Customer satisfaction suffers. And morale on both sides takes a hit.

This guide shows how to break that cycle with AI-powered onboarding workflows that get new engineers productive faster—without increasing the load on your most valuable talent.

Why developer onboarding is failing—and why current approaches no longer work

Software teams often treat onboarding as either a cultural challenge (“pair new folks with senior engineers”) or a documentation challenge (“write everything down and hope it stays current”). But modern engineering complexity and velocity have outgrown both approaches.

The real problem is structural.

It stems from the three forces that shape every engineering organization today: people, process, and context. You may be used to hearing the phrase “people, process, technology,” but in today’s AI-driven era, context is more critical than technology.

Each one is under strain—and together, they create onboarding bottlenecks that legacy methods can’t solve.

People: a resource strain that compounds with scale

In most engineering organizations, senior developers inevitably become the go-to experts for every question: architectural decisions, debugging guidance, reasoning behind edge cases, deployment nuances, and ownership boundaries. This isn’t intentional—it’s a function of experience accumulating in a small group. A classic knowledge silo.

But the downstream effect is predictable: constant interruptions, context switching, decision fatigue, slower output, and eventually burnout.

As teams grow, especially in enterprise, multi-product, or PE-backed companies, the expert-to-engineer ratio compresses. A handful of senior engineers support dozens of others. Institutional knowledge becomes harder to access yet more essential. New hires slow down the experts, knowledge bottlenecks slow the roadmap, and the strain compounds sprint after sprint.

Process: workflows that can’t keep pace with modern codebases

Traditional onboarding relies heavily on synchronous knowledge transfer: shadowing, pairing sessions, Slack back-and-forth, and ad hoc “walk me through this” meetings. These methods are most effective when systems are small and change slowly.

But they don’t scale in distributed, fast-evolving architectures.

Documentation can’t keep up either. The moment it’s written, it starts aging. New services, refactors, and integrations change context faster than any wiki or handbook can be updated. New developers quickly learn that the “official” onboarding materials describe how the system used to work—not how it works now. When they get stuck, they escalate the question… right back to the senior engineers whose time you’re trying to protect.

AI-generated code accelerates the problem. It increases development velocity but introduces unfamiliar abstractions and patterns that no single engineer fully understands. The codebase grows faster than knowledge can be shared.

Context: systems too complex for human-only onboarding

Modern software is no longer a single repo, a linear stack, or a predictable request/response system. It’s an interconnected network of distributed services, asynchronous jobs, event-driven pipelines, and multi-cloud infrastructure. Teams juggle microservices, schema evolution, third-party APIs, and telemetry scattered across tools.

Key system behaviors—how requests flow, where failures originate, how errors surface across services—are rarely documented in one place. Even experienced engineers struggle to trace these flows clearly.

As the codebase evolves and becomes more interconnected, the context gap widens. New developers must understand how the system behaves today, and how it behaved six months ago. But no amount of traditional onboarding can keep pace with architectures that change weekly.

Why AI-native onboarding is now essential for engineering teams

Legacy methods can’t reliably keep pace with fast-growing or frequently changing environments.

New AI models that live in your codebase can understand your code, your services, your telemetry, your commit history, and your architecture.

Instead of relying on institutional knowledge stored in the minds of overloaded experts, AI surfaces accurate system context on demand. AI-native onboarding:

Provides developers with a safe space to ask foundational questions without fear of judgment or interrupting senior engineers.

Shifts senior engineers from “knowledge bottlenecks” to “curators of system context,” reducing their cognitive load.

This frees experts from repetitive training processes and enables them to focus on higher-value work: solving complex problems, improving architecture, and shaping future systems.

Where AI accelerates onboarding: step-by-step playbook for faster developer ramp-up

Modern engineering systems operating in complex production environments generate more code, decisions, and context than humans can practically transmit during onboarding. AI becomes essential, not because it automates onboarding, but because it gives new developers the context that used to belong exclusively to senior engineers. That edge case that surfaces once every six months and that only Bob knows how to solve? Your AI system remembers it too, and knows how Bob solved it the last three times. That one customer with a unique implementation that Susie helped roll out? The AI understands all the nuances of the implementation.

Each tactic below targets a specific friction point, showing how AI replaces slow, manual knowledge transfer with system-level intelligence that scales.

1. Give new developers instant architectural clarity

New hires often struggle to form a clear mental model of the system. They spend days navigating outdated diagrams, partial documentation, and scattered Slack threads before they understand how services actually connect.

AI-generated architecture maps, dependency graphs, and sequence flows provide an accurate, up-to-date view of how the system behaves today. Developers see the full topology immediately, rather than constructing it manually through trial and error.

Shrinks the “cold start” period from days to hours.

Reduces foundational questions and early missteps by showing upstream/downstream dependencies upfront.

How to get started: Generate an architecture or dependency map for one frequently touched service. For example, a new developer updating a billing endpoint can instantly see which services call it, what it triggers downstream, and what side effects to consider—collapsing days of exploration into minutes.

Signal it’s working: New hires can walk through a core system flow confidently within their first few days.
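To make the billing example concrete, here is a minimal sketch of the underlying idea: turning a list of service-to-service calls into a “who calls this, and what does it call” summary. The service names and the `CALLS` edge list are hypothetical; in a real system the edges would come from tracing data or static analysis rather than being written by hand.

```python
from collections import defaultdict

# Hypothetical call edges as (caller, callee) pairs. Names are illustrative only.
CALLS = [
    ("checkout-web", "billing-api"),
    ("admin-portal", "billing-api"),
    ("billing-api", "payments-gateway"),
    ("billing-api", "invoice-worker"),
    ("invoice-worker", "email-service"),
]


def build_maps(calls):
    """Return (callers, callees) adjacency maps built from call edges."""
    callers, callees = defaultdict(set), defaultdict(set)
    for caller, callee in calls:
        callees[caller].add(callee)
        callers[callee].add(caller)
    return callers, callees


def describe(service, calls):
    """Summarize which services call `service` and which it calls directly."""
    callers, callees = build_maps(calls)
    return {
        "called_by": sorted(callers[service]),
        "calls": sorted(callees[service]),
    }
```

Calling `describe("billing-api", CALLS)` lists `admin-portal` and `checkout-web` as callers, and `invoice-worker` and `payments-gateway` as direct downstream calls. That is the kind of answer a new hire needs before touching the endpoint.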

2. Replace tacit knowledge with semantic code understanding

Much of senior engineers’ most valuable knowledge exists only in their heads—design intent, edge cases, historical decisions, and reasons behind architectural tradeoffs. New developers spend a huge percentage of onboarding time searching through repos and Slack trying to reconstruct this missing context.

Semantic understanding surfaces relationships between files, usage patterns, ownership history, and cross-service connections directly from the code. New hires enter discussions with more context and more focused questions.

Reduces senior interruptions because developers escalate fewer basic questions.

Improves question quality—new engineers arrive with relevant context already explored.

How to get started: Have the new hire run semantic queries on a module they’re assigned to—looking at its related modules, upstream integrations, ownership history, and usage patterns before touching the code. This surfaces accurate connections and intent immediately, so new hires have a clear starting point before asking for help.

Signal it’s working: Seniors report fewer context-setting conversations and more high-quality questions.
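A full semantic index is beyond a short example, but one of the signals it draws on can be sketched with a much simpler stand-in: co-change counting over commit history. The sketch below assumes a hypothetical `COMMITS` list of (author, files) pairs. It reports which files tend to change alongside a target file and who has touched that file most often. It is a heuristic, not semantic understanding.

```python
from collections import Counter

# Hypothetical commit history: (author, files touched). Illustrative data only.
COMMITS = [
    ("bob", ["billing/invoice.py", "billing/tax.py"]),
    ("susie", ["billing/invoice.py", "notifications/email.py"]),
    ("bob", ["billing/invoice.py", "billing/tax.py", "README.md"]),
    ("bob", ["auth/oauth.py"]),
]


def related_files(target, commits):
    """Count how often other files changed in the same commit as `target`."""
    counts = Counter()
    for _author, files in commits:
        if target in files:
            counts.update(f for f in files if f != target)
    return counts.most_common()


def frequent_authors(target, commits):
    """Count commits per author that touched `target`."""
    return Counter(a for a, files in commits if target in files).most_common()
```

For `billing/invoice.py`, the top related file is `billing/tax.py` (two shared commits), and `bob` is the most frequent author. That gives a new hire a reasonable first guess at what else to read and whom to ask.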

3. Turn debugging into a guided learning path

Debugging is where new developers lose the most time. Without historical context or intuition, every issue becomes a guessing game—digging through logs, hopping between services, and reconstructing flows manually.

AI-guided debugging analyzes traces, logs, historical fixes, and dependency chains to highlight likely root causes and relevant code paths. Instead of guessing, new developers follow a structured path toward understanding the real failure.

Reduces time wasted on unproductive debugging routes.

Teaches system behavior through real production incidents, not theoretical documentation.

How to get started: Guide new hires through a recent incident using automated trace reconstruction. For example, an OAuth failure can be replayed step-by-step—request initiation, validation, provider response, and failure origin—turning a multi-hour hunt into a clear learning sequence.

Signal it’s working: New devs can resolve or narrow down an unfamiliar bug independently within their first month.
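The replay described above can be approximated in a few lines once spans are available. This sketch assumes a hypothetical `Span` record and hand-written sample data for a failed OAuth login. It orders the spans into a timeline and picks the earliest failing step as a first guess at the failure origin, which is a simplification of real root-cause analysis.

```python
from dataclasses import dataclass


@dataclass
class Span:
    service: str
    step: str
    start_ms: int
    ok: bool


# Hypothetical spans from one failed OAuth login, in arrival order.
SPANS = [
    Span("auth-api", "validate state parameter", 12, True),
    Span("web", "start login request", 0, True),
    Span("auth-api", "exchange code with provider", 40, False),
    Span("web", "render error page", 95, False),
]


def timeline(spans):
    """Return spans ordered by start time."""
    return sorted(spans, key=lambda s: s.start_ms)


def failure_origin(spans):
    """Return the earliest failing span, or None if every span succeeded."""
    for span in timeline(spans):
        if not span.ok:
            return span
    return None
```

Here `failure_origin(SPANS)` points at the `exchange code with provider` step in `auth-api`, not at the error page the user eventually saw. Walking a new hire through that distinction is the learning sequence this tactic is after.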

4. Use real production behavior to accelerate intuition

Static documentation can’t teach developers how real users behave. New hires struggle to understand edge cases, unexpected inputs, or multi-step flows because they only see idealized versions of the system.

AI surfaces reconstructed user sessions, flow summaries, and behavioral anomalies, giving developers a realistic view of how customers interact with the product—what succeeds, what fails, and what creates confusion.

Builds product intuition weeks earlier than traditional onboarding.

Helps developers anticipate user needs and edge cases before they ship code.

How to get started: Review a handful of real user flows, like a failed checkout, onboarding dropout, or multi-step form completion, automatically reconstructed from telemetry. These real sequences teach more than any spec.

Signal it’s working: New hires start referencing real user behavior in design and implementation discussions.

5. Increase contribution confidence with AI-powered PR guidance

Early pull requests are stressful for new developers—they’re unsure about dependencies, conventions, or unintended side effects. This slows reviews and makes new engineers overly cautious.

AI-assisted PR analysis highlights risks, missing tests, dependency impacts, regression likelihood, and style inconsistencies before a PR is submitted. Developers receive actionable feedback instantly, rather than waiting for review cycles.

Shortens feedback loops and increases the number of PRs new hires can ship.

Reduces regressions by catching issues earlier in the process.

How to get started: Have new hires run AI analysis on their first PRs before requesting review. They’ll see immediate, concrete improvements in what they submit.

Signal it’s working: Early PRs require fewer review cycles and ship with fewer regressions.
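One of the checks mentioned above, missing tests, is easy to illustrate without any AI at all. The sketch below assumes a hypothetical repository convention in which `src/<pkg>/<name>.py` is covered by `tests/<pkg>/test_<name>.py`. It flags changed source files whose matching test file is not part of the same PR. A real analysis would look at far more than file names.

```python
from pathlib import PurePosixPath


def missing_tests(changed_files):
    """Return changed source files with no matching test file in the PR.

    Assumes a hypothetical layout: src/<pkg>/<name>.py is tested by
    tests/<pkg>/test_<name>.py.
    """
    changed = set(changed_files)
    flagged = []
    for path in sorted(changed):
        p = PurePosixPath(path)
        # Only Python files under src/ are expected to have tests.
        if p.parts[:1] != ("src",) or p.suffix != ".py":
            continue
        expected = PurePosixPath("tests", *p.parts[1:-1], f"test_{p.name}")
        if str(expected) not in changed:
            flagged.append(path)
    return flagged
```

Given a PR that touches `src/billing/invoice.py`, `src/billing/tax.py`, and `tests/billing/test_tax.py`, the function returns only `src/billing/invoice.py`. A new hire can act on that prompt before asking for review.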

6. Use simulations to teach system-level reasoning

Distributed systems make it hard for new developers to understand how their changes ripple across services. What looks like a minor update can unintentionally break flows in distant parts of the system.

AI-powered simulations show how changes propagate through real flows such as checkout, onboarding, notifications, and data syncs. Developers see architectural consequences and dependency chains upfront. This all happens in memory, with no test infrastructure required.

Builds early intuition around service interactions and hidden dependencies.

Prevents regressions by exposing downstream effects before code is merged.

How to get started: Simulate one common flow that frequently breaks or touches multiple services. For instance, changing a notification handler can reveal impacts on onboarding, billing, and security messages that aren’t obvious from reading code.

Signal it’s working: New devs proactively run flow simulations and identify risks before review.
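The notification-handler example boils down to a reachability question: starting from the changed component, what sits downstream? The sketch below is a plain breadth-first walk over a hypothetical, hand-written `USED_BY` graph. It is not the simulation described above, which reasons about behavior, but it shows the shape of the answer a new developer is looking for.

```python
from collections import deque

# Hypothetical "is used by" edges: component -> components that depend on it.
USED_BY = {
    "notification-handler": ["onboarding-flow", "billing-reminders"],
    "billing-reminders": ["billing-flow"],
    "onboarding-flow": [],
    "billing-flow": [],
    "search-index": ["catalog-flow"],
}


def impacted(changed, used_by):
    """Breadth-first walk: map each downstream component to its hop distance."""
    seen = {changed: 0}
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for dependent in used_by.get(node, []):
            if dependent not in seen:
                seen[dependent] = seen[node] + 1
                queue.append(dependent)
    del seen[changed]  # the changed component itself is not "impacted"
    return seen
```

For a change to `notification-handler`, the walk reports `onboarding-flow` and `billing-reminders` one hop away and `billing-flow` two hops away, while `catalog-flow` is correctly left out. The two-hop result is the kind of dependency that is hard to spot from reading the handler alone.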

7. Build a living onboarding guide through automated knowledge capture

Documentation ages the moment it’s written, especially in fast-changing systems. New hires quickly learn not to trust it, which slows down the onboarding process.

AI automatically captures knowledge from debugging sessions, merged PRs, regressions, production patterns, and resolved tickets—turning engineering activity into continuously updated onboarding material.

Keeps onboarding accurate because documentation reflects the current system.

Creates compounding value: every fix enriches the onboarding experience.

How to get started: Enable automated capture for a common issue type—errors, regressions, recurring questions from new hires. Over time, this grows into a rich system-specific knowledge base.

Signal it’s working: New developers rely on the knowledge base first, not Slack or senior engineers.

How to measure onboarding success with AI-powered workflows

Adopting AI-assisted onboarding isn’t just about speed—it’s about making progress measurable. Teams that shift to system-aware AI workflows often focus on the following indicators:

Ramp-up speed:

How quickly can developers ship their first PR? Their first multi-point story? Their first independent feature?

Independence:

How often do new hires interrupt senior engineers? How quickly does that dependency drop?

Context access:

How quickly can developers find relevant logs, code paths, tickets, or user sessions?

Quality:

Are defect rates, regressions, and time-to-resolution improving?

Velocity:

Does PR throughput increase? Are deployment cycles shortening? Is backlog churn decreasing?

Experience:

Do new hires feel more confident, autonomous, and clear on how the system works?

Org-wide alignment:

Are engineering, product, support, and QA collaborating more smoothly with shared context?

These signals and metrics reveal whether onboarding is scaling—or whether teams are still relying on heroics.
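Two of these indicators, ramp-up speed and review cycles on early PRs, can be tracked with very little tooling. The sketch below assumes a hypothetical `HIRES` record per new hire, holding a start date and a list of (merge date, review rounds) pairs. The names and dates are illustrative, and real data would come from your source-control and HR systems.

```python
from datetime import date
from statistics import mean

# Hypothetical records: start date plus (merge date, review rounds) per PR.
HIRES = {
    "alex": {
        "start": date(2024, 3, 4),
        "prs": [(date(2024, 3, 8), 3), (date(2024, 3, 15), 1)],
    },
    "sam": {
        "start": date(2024, 3, 4),
        "prs": [(date(2024, 3, 20), 4)],
    },
}


def days_to_first_pr(record):
    """Days from start date to the first merged PR, or None if none merged."""
    merged = [merged_on for merged_on, _rounds in record["prs"]]
    return (min(merged) - record["start"]).days if merged else None


def avg_review_rounds(record):
    """Average number of review rounds per merged PR, or None if none merged."""
    rounds = [r for _merged_on, r in record["prs"]]
    return mean(rounds) if rounds else None
```

With this sample data, `alex` merges a first PR after 4 days with an average of 2 review rounds, while `sam` takes 16 days and 4 rounds. Comparing cohorts before and after a workflow change is what turns these numbers into a signal.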

How PlayerZero enables AI-native onboarding

Once you understand the onboarding challenges and the role AI can play, the next question is: what does it look like to actually implement these strategies within an engineering organization?

PlayerZero implements each of the tactics above by grounding AI in your actual system—your repos, telemetry, logs, sessions, commit history, and architecture. Instead of generating generic suggestions, it builds a continuously updated model of how your software behaves, then applies that model across onboarding workflows.

Here’s how each capability maps directly to the playbook:

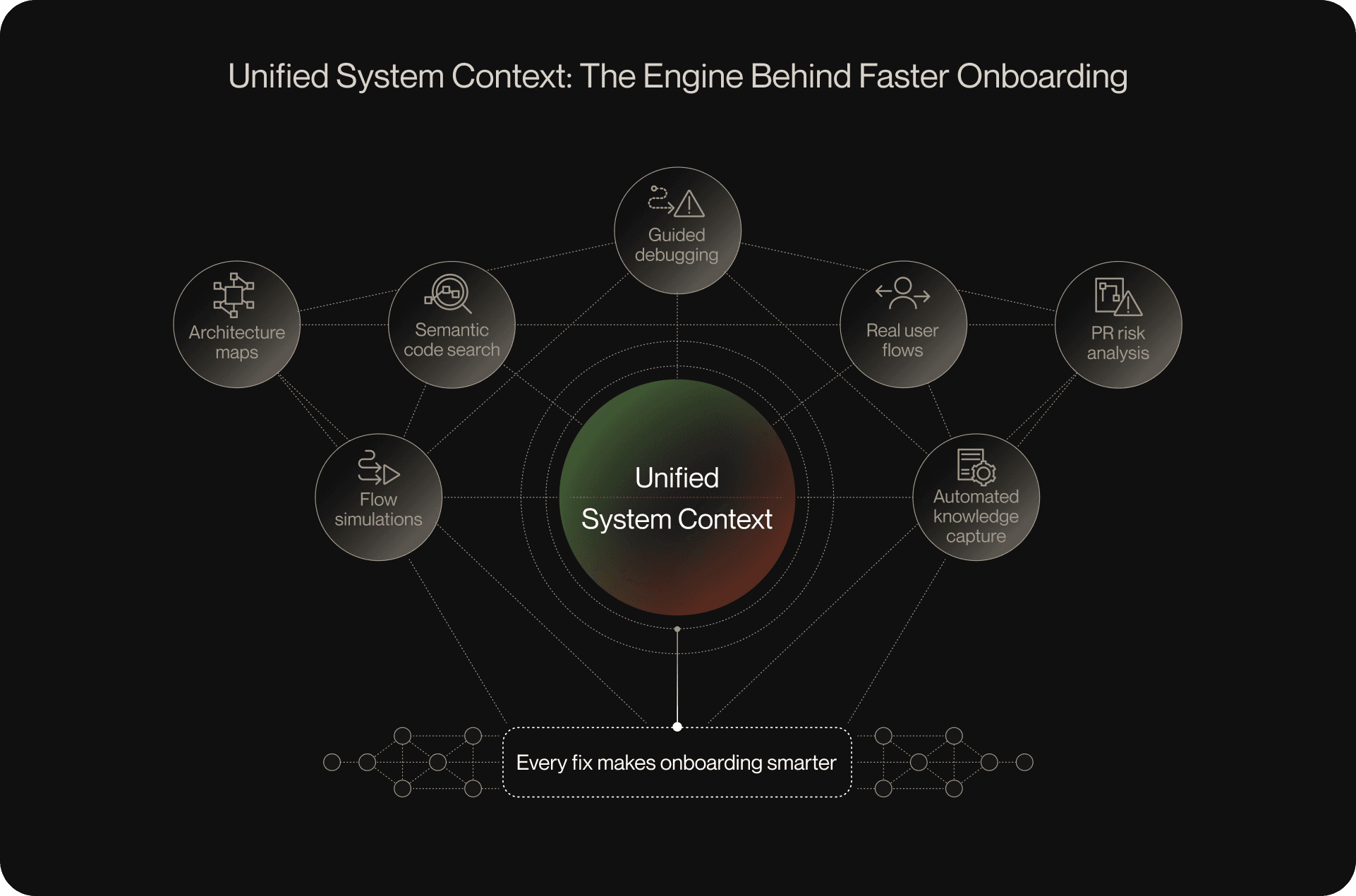

Unified system context: Connects repos, telemetry, logs, session data, and tickets, so new hires can trace requests end-to-end without bouncing between tools or escalations. Architectural clarity becomes instant rather than detective work.

Semantic code search: Surfaces ownership, intent, relationships, and usage patterns from real code behavior and commit history. This reduces the dependency on tacit knowledge and gives new hires the necessary context before asking senior engineers for help.

Agentic debugging: Reconstructs real failure paths using logs, traces, and historical fixes, turning debugging into a guided learning path instead of a multi-hour scavenger hunt. New developers learn how the system actually behaves faster and with fewer interruptions.

PR analysis: Summarizes what’s in the pull request and highlights risk, dependency chains, affected components, missing tests, and regression likelihood, helping new hires submit higher-confidence PRs earlier, with fewer review cycles.

Simulation engine: Analyzes the most likely problems that might occur in production, then performs an in-memory walkthrough to predict behavior after a code change, without running the actual code. New hires develop system-level intuition by visualizing dependencies and ripple effects before they write or review code.

Automated knowledge capture: Turns debugging sessions, PRs, regressions, and user sessions into continuously updated, searchable documentation. Because documentation is grounded in the actual code, new hires always learn from the current state, not stale artifacts.

System-aware AI agent: Answers questions with verifiable, traceable, code-grounded reasoning. Developers can ask:

“What breaks if I change this?”

“Which services rely on this endpoint?”

“Show me all historical fixes for this pattern.”

…and get accurate answers linked directly into the system.

And these capabilities don’t just work on paper—they meaningfully reshape onboarding inside real teams. For example, using PlayerZero’s unified context and agentic debugging, Cayuse identified and resolved 90% of issues before they reached customers, reducing their time-to-resolution by 80%. Their developers ramped faster because they weren’t waiting on senior engineers to reconstruct incidents or explain historical context.

Key Data saw a similar impact. By combining PlayerZero’s semantic code understanding, PR analysis, and session-based debugging, they reduced replication cycles from weeks to minutes. They moved from weekly releases to multiple deployments per week—giving new hires a clear path to early, independent contribution.

These results highlight the core value of AI-native onboarding: faster ramp-up, fewer expert bottlenecks, and a workflow where new developers can contribute with confidence from day one.

Your next steps for building an AI-powered onboarding workflow

Developer onboarding breaks when teams try to scale it with people, meetings, and documentation alone. AI is now the most reliable way to overcome friction points, giving new developers the system context they need without increasing the workload on senior engineers.

A practical place to start is choosing the one onboarding workflow where context gaps slow developers down the most, whether that’s architectural understanding, debugging unfamiliar services, or preparing PRs with confidence. Introduce an AI-assisted workflow in that area and measure what changes: fewer interruptions, faster ramp-up, or clearer mental models.

Once that foundation is in place, expand into adjacent workflows where system-level context has the biggest impact. And the value isn’t limited to engineering—AI-powered context dramatically accelerates onboarding for support teams diagnosing issues, product managers understanding system behavior, and even go-to-market teams who need clarity on how features actually work. The same underlying system intelligence becomes a shared onboarding layer across the entire organization.

The teams that see the fastest results are the ones using an AI platform that understands their codebase and runtime behavior end-to-end, not just generating answers in isolation.

If you want to see how this looks in practice, book a demo to see how PlayerZero accelerates developer onboarding and strengthens engineering velocity.